81 changed files with 303 additions and 1175 deletions

BIN

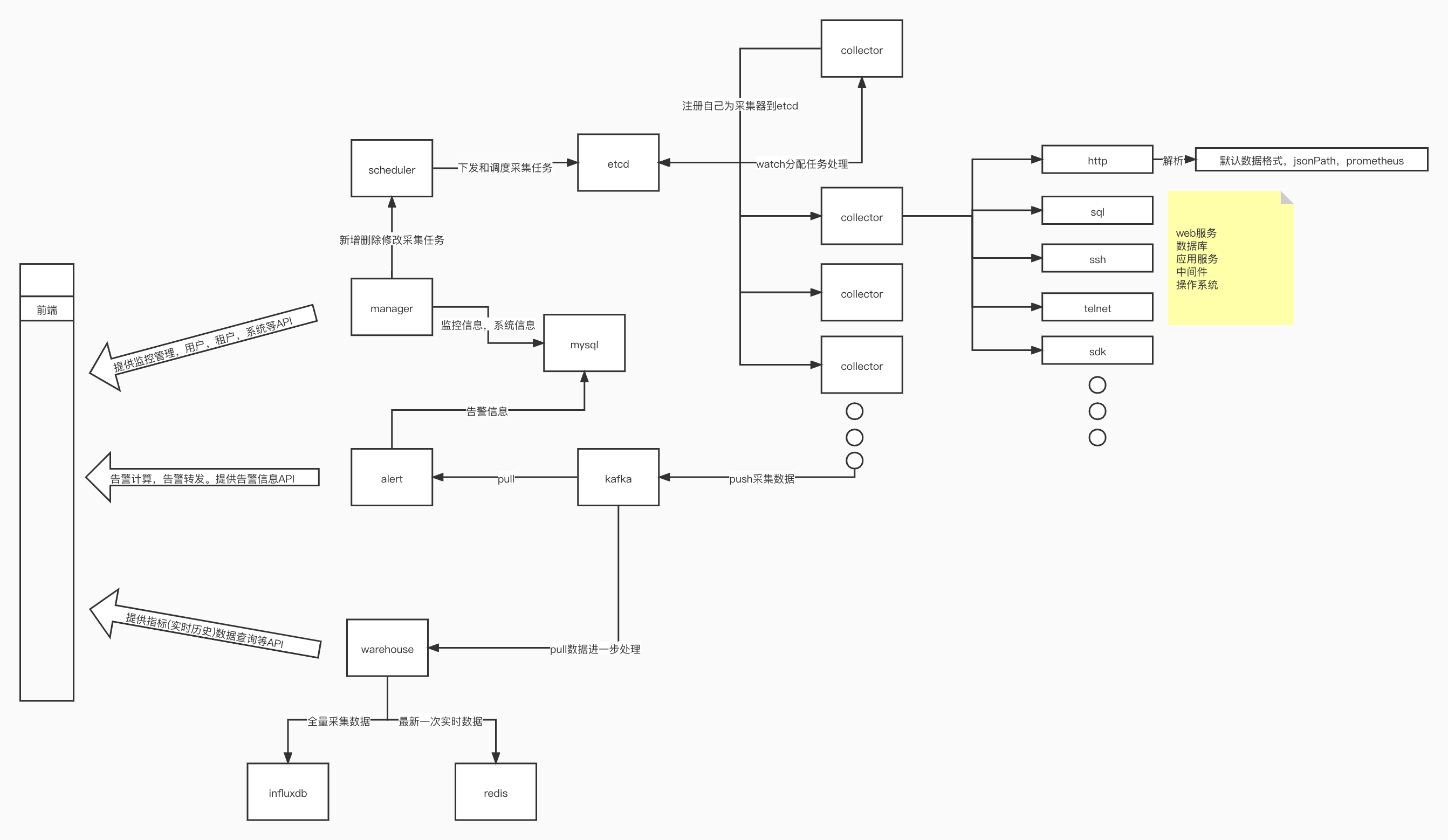

Architecture.jpg

+ 5

- 9

README.md

+ 9

- 2

alerter/pom.xml

+ 0

- 11

alerter/src/main/java/com/usthe/alert/AlerterDataQueue.java

+ 5

- 5

alerter/src/main/java/com/usthe/alert/calculate/CalculateAlarm.java

+ 0

- 80

alerter/src/main/java/com/usthe/alert/entrance/KafkaDataConsume.java

+ 0

- 24

alerter/src/main/java/com/usthe/alert/entrance/KafkaMetricsDataDeserializer.java

+ 0

- 1

alerter/src/main/resources/META-INF/spring.factories

+ 0

- 44

assembly/collector/assembly.xml

+ 0

- 109

assembly/collector/bin/startup.sh

+ 0

- 18

assembly/server/bin/shutdown.sh

+ 1

- 13

collector/README.md

+ 0

- 19

collector/plugins/pom.xml

+ 0

- 48

collector/plugins/sample-plugin/pom.xml

+ 0

- 12

collector/plugins/sample-plugin/src/main/java/com/usthe/collector/plugin/SameClass.java

+ 0

- 14

collector/plugins/sample-plugin/src/main/java/com/usthe/plugin/sample/ExportDemo.java

+ 77

- 6

collector/pom.xml

+ 0

- 146

collector/server/pom.xml

+ 0

- 19

collector/server/src/main/java/com/usthe/collector/Collector.java

+ 0

- 23

collector/server/src/main/java/com/usthe/collector/dispatch/DispatchConfiguration.java

+ 0

- 57

collector/server/src/main/java/com/usthe/collector/dispatch/entrance/http/CollectJobController.java

+ 0

- 62

collector/server/src/main/java/com/usthe/collector/dispatch/export/KafkaDataExporter.java

+ 0

- 18

collector/server/src/main/java/com/usthe/collector/dispatch/export/KafkaMetricsDataSerializer.java

+ 0

- 12

collector/server/src/main/java/com/usthe/collector/plugin/SameClass.java

+ 0

- 20

collector/server/src/main/java/com/usthe/collector/plugin/TestPlugin.java

+ 0

- 19

collector/server/src/main/resources/application.yml

+ 0

- 6

collector/server/src/main/resources/banner.txt

+ 0

- 79

collector/server/src/main/resources/logback-spring.xml

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/collect/AbstractCollect.java → collector/src/main/java/com/usthe/collector/collect/AbstractCollect.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/collect/database/JdbcCommonCollect.java → collector/src/main/java/com/usthe/collector/collect/database/JdbcCommonCollect.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/collect/http/HttpCollectImpl.java → collector/src/main/java/com/usthe/collector/collect/http/HttpCollectImpl.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/collect/icmp/IcmpCollectImpl.java → collector/src/main/java/com/usthe/collector/collect/icmp/IcmpCollectImpl.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/collect/telnet/TelnetCollectImpl.java → collector/src/main/java/com/usthe/collector/collect/telnet/TelnetCollectImpl.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/CollectorProperties.java → collector/src/main/java/com/usthe/collector/common/CollectorProperties.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/cache/CacheCloseable.java → collector/src/main/java/com/usthe/collector/common/cache/CacheCloseable.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/cache/CacheDetectable.java → collector/src/main/java/com/usthe/collector/common/cache/CacheDetectable.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/cache/CacheIdentifier.java → collector/src/main/java/com/usthe/collector/common/cache/CacheIdentifier.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/cache/CommonCache.java → collector/src/main/java/com/usthe/collector/common/cache/CommonCache.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/cache/support/CommonJdbcConnect.java → collector/src/main/java/com/usthe/collector/common/cache/support/CommonJdbcConnect.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/http/CustomHttpRequestRetryHandler.java → collector/src/main/java/com/usthe/collector/common/http/CustomHttpRequestRetryHandler.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/http/HttpClientPool.java → collector/src/main/java/com/usthe/collector/common/http/HttpClientPool.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/http/IgnoreReqCookieSpec.java → collector/src/main/java/com/usthe/collector/common/http/IgnoreReqCookieSpec.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/common/http/IgnoreReqCookieSpecProvider.java → collector/src/main/java/com/usthe/collector/common/http/IgnoreReqCookieSpecProvider.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/CollectDataDispatch.java → collector/src/main/java/com/usthe/collector/dispatch/CollectDataDispatch.java

+ 3

- 3

collector/server/src/main/java/com/usthe/collector/dispatch/CommonDispatcher.java → collector/src/main/java/com/usthe/collector/dispatch/CommonDispatcher.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/DispatchConstants.java → collector/src/main/java/com/usthe/collector/dispatch/DispatchConstants.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/DispatchProperties.java → collector/src/main/java/com/usthe/collector/dispatch/DispatchProperties.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/MetricsCollect.java → collector/src/main/java/com/usthe/collector/dispatch/MetricsCollect.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/MetricsCollectorQueue.java → collector/src/main/java/com/usthe/collector/dispatch/MetricsCollectorQueue.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/MetricsTaskDispatch.java → collector/src/main/java/com/usthe/collector/dispatch/MetricsTaskDispatch.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/WorkerPool.java → collector/src/main/java/com/usthe/collector/dispatch/WorkerPool.java

+ 83

- 0

collector/src/main/java/com/usthe/collector/dispatch/entrance/internal/CollectJobService.java

+ 2

- 2

collector/server/src/main/java/com/usthe/collector/dispatch/entrance/http/CollectResponseEventListener.java → collector/src/main/java/com/usthe/collector/dispatch/entrance/internal/CollectResponseEventListener.java

+ 56

- 0

collector/src/main/java/com/usthe/collector/dispatch/export/MetricsDataExporter.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/timer/HashedWheelTimer.java → collector/src/main/java/com/usthe/collector/dispatch/timer/HashedWheelTimer.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/timer/Timeout.java → collector/src/main/java/com/usthe/collector/dispatch/timer/Timeout.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/timer/Timer.java → collector/src/main/java/com/usthe/collector/dispatch/timer/Timer.java

+ 1

- 1

collector/server/src/main/java/com/usthe/collector/dispatch/timer/TimerDispatch.java → collector/src/main/java/com/usthe/collector/dispatch/timer/TimerDispatch.java

+ 1

- 1

collector/server/src/main/java/com/usthe/collector/dispatch/timer/TimerDispatcher.java → collector/src/main/java/com/usthe/collector/dispatch/timer/TimerDispatcher.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/timer/TimerTask.java → collector/src/main/java/com/usthe/collector/dispatch/timer/TimerTask.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/dispatch/timer/WheelTimerTask.java → collector/src/main/java/com/usthe/collector/dispatch/timer/WheelTimerTask.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/util/CollectorConstants.java → collector/src/main/java/com/usthe/collector/util/CollectorConstants.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/util/JsonPathParser.java → collector/src/main/java/com/usthe/collector/util/JsonPathParser.java

+ 0

- 0

collector/server/src/main/java/com/usthe/collector/util/SpringContextHolder.java → collector/src/main/java/com/usthe/collector/util/SpringContextHolder.java

+ 9

- 0

collector/src/main/resources/META-INF/spring.factories

+ 0

- 0

collector/server/src/test/java/com/usthe/collector/collect/telnet/TelnetCollectImplTest.java → collector/src/test/java/com/usthe/collector/collect/telnet/TelnetCollectImplTest.java

+ 1

- 1

common/pom.xml

+ 12

- 11

manager/pom.xml

+ 10

- 10

manager/src/main/java/com/usthe/manager/service/impl/MonitorServiceImpl.java

+ 0

- 18

manager/src/main/java/com/usthe/manager/support/GlobalExceptionHandler.java

+ 1

- 2

pom.xml

+ 9

- 0

script/assembly/package-build.sh

+ 4

- 1

assembly/server/assembly.xml → script/assembly/server/assembly.xml

+ 0

- 0

assembly/collector/bin/shutdown.sh → script/assembly/server/bin/shutdown.sh

+ 0

- 0

assembly/server/bin/startup.sh → script/assembly/server/bin/startup.sh

+ 9

- 2

warehouse/pom.xml

+ 0

- 81

warehouse/src/main/java/com/usthe/warehouse/entrance/KafkaDataConsume.java

+ 0

- 24

warehouse/src/main/java/com/usthe/warehouse/entrance/KafkaMetricsDataDeserializer.java

+ 0

- 134

warehouse/src/main/java/com/usthe/warehouse/store/InfluxdbDataStorage.java

+ 5

- 6

warehouse/src/main/java/com/usthe/warehouse/store/RedisDataStorage.java

+ 0

- 2

warehouse/src/main/resources/META-INF/spring.factories
